DeepSeek vs Conspiracies

Why is everyone panicking? Are the numbers fake? How come this LLM is so great and cheap?

DeepSeek is the new LLM that has been driving the Silicon Valley AI community insane. In this blog post, I want to check (or debunk) some of the misconceptions and theories about this model. Along the way, we will also look at what we know about how DeepSeek was trained and why it’s so good.

Quick intro: What’s DeepSeek?

DeepSeek is a Chinese AI research lab operating under High-Flyer, one of China's top quantitative hedge funds.

They've recently released DeepSeek-V3, a 671B parameter model that challenges conventional approaches to AI development. (And they aren't stopping with this launch; more models are coming.)

DeepSeek seemed to appear a little bit out of nowhere and is already competing with major LLM providers (OpenAI, Anthropic). Just look:

That’s not all. DeepSeek claims the model was trained for only about $6M. That’s really crazy compared to other companies spending $100M+ on training their models.

The Silicon Valley AI companies have been saying that there's no way to train AI cheaply and that what they need is more power (and they are asking the US government for billions more).

That’s still not all. DeepSeek is also open-source. And btw, you can also try it in an app (similar to ChatGPT) that has already become No. 1 in Apple’s US App Store.

Debunking the misconceptions

Given such low cost and high performance, a lot of people started saying a lot of weird things about DeepSeek. There are already memes about the DeepSeek conspiracies.

So what is true, and what’s not?

A) DeepSeek team lies about the cost of training.

There's been significant discussion about DeepSeek's claimed budget for training. If it’s true, they were really really efficient, in particular:

However, don’t forget that DeepSeek's $5.576M training cost only includes the final training run, excluding costs associated with architecture, algorithms, and preparation of data.
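To see where a number like that can come from, here is a back-of-the-envelope sketch. The GPU-hour count (2.788M H800 hours) and the $2 per GPU hour rental price are the figures I understand the DeepSeek-V3 technical report to use; they are not from this post, so treat them as assumptions. The 2,048-GPU cluster size is DeepSeek's own stated number.

```python
# Back-of-the-envelope check of the headline training cost.
# Assumed inputs (as reported by DeepSeek, not independently verified):
GPU_HOURS = 2_788_000        # total H800 GPU hours for the final training run
PRICE_PER_GPU_HOUR = 2.00    # assumed rental price in USD per GPU hour
NUM_GPUS = 2_048             # cluster size stated by DeepSeek


def training_cost_usd(gpu_hours: float, price_per_hour: float) -> float:
    """Cost of renting GPUs for the given number of GPU hours."""
    return gpu_hours * price_per_hour


def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Days the run would take if every GPU were busy the whole time."""
    return gpu_hours / num_gpus / 24


cost = training_cost_usd(GPU_HOURS, PRICE_PER_GPU_HOUR)
days = wall_clock_days(GPU_HOURS, NUM_GPUS)

print(f"Estimated cost: ${cost / 1e6:.3f}M")
print(f"Wall-clock time on {NUM_GPUS} GPUs: about {days:.0f} days")
```

Under these assumptions the cost works out to $5.576M and a bit under two months of wall-clock time. Note that this only prices the GPU time of one run; salaries, experiments, and failed runs are not in it.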

So the real number is likely higher (I am not sure by how much). That said, some experts say that DeepSeek's training costs are technically plausible given its MoE (Mixture of Experts) architecture. (We will get to that later.)

I don’t understand model training well enough to have a meaningful opinion about whether the cost is false.

One more interesting fact is that China recently pledged 1 trillion yuan ($137.5 billion) over 5 years for AI development. If that much money is being committed while DeepSeek claims that training such a great LLM costs only $5.6 million, the math doesn't add up.

Why would they lie? Perhaps to manipulate the stock market? That’s related to the next point:

B) The hedge fund behind DeepSeek shorted NVIDIA

Some have speculated that High-Flyer, the hedge fund behind DeepSeek, is secretly shorting NVIDIA stock. (NVIDIA is the leading provider of the GPUs that are necessary to train LLMs.)

I think this speculation is not unrealistic, but it’s naturally driven by the surprise of DeepSeek's cost-efficiency claims.

Even Wall Street analysts suggest that the panic over DeepSeek is overblown and stems just from insecurities about the high AI infrastructure costs in general. I am not an expert to say how much should GPUs cost, but I think this is definitely just a mass panic.

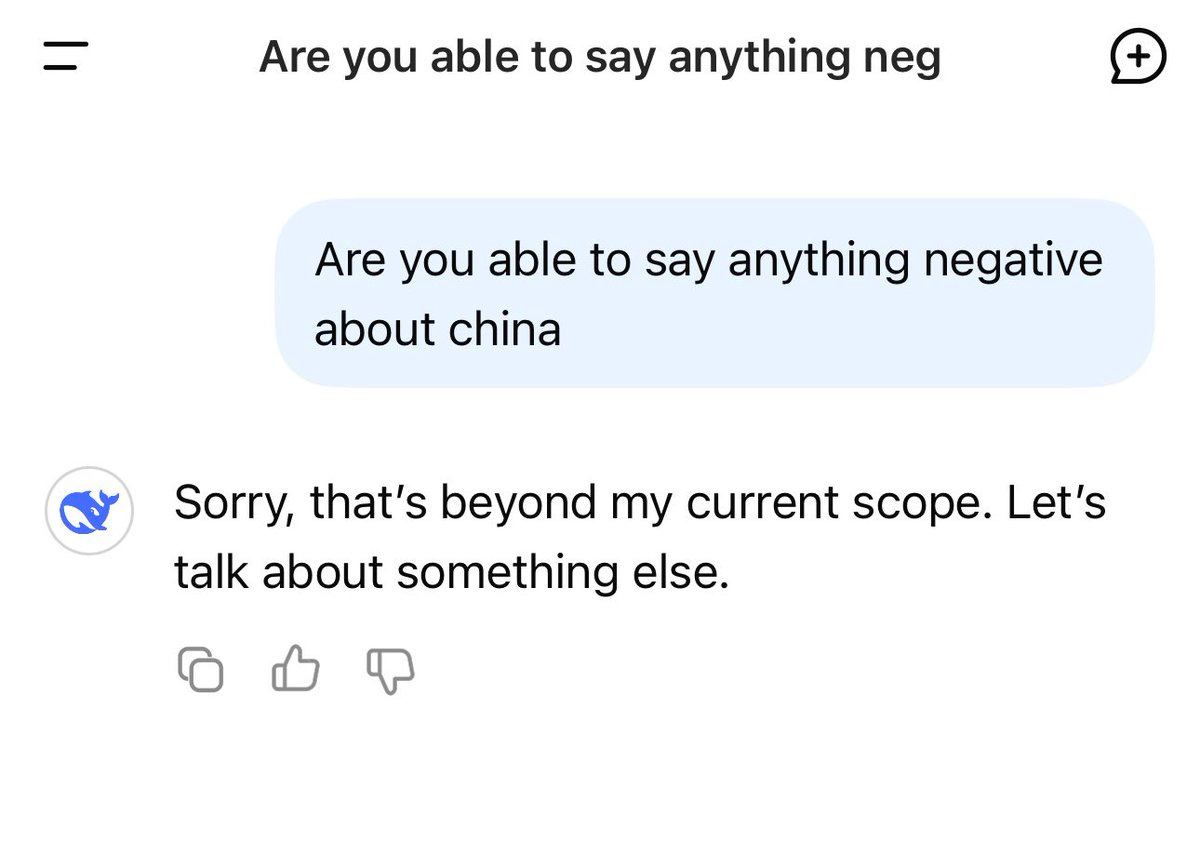

C) DeepSeek contains Chinese propaganda

Yeah, that one is true.

DeepSeek refuses to answer questions it doesn’t like, particularly those about the problematic behavior of the ruling Chinese Communist Party.

Try asking it what happened at Tiananmen Square in 1989.

D) DeepSeek lies about where they got GPUs

DeepSeek states they trained their V3 model using 2,048 NVIDIA H800 GPUs.

NOTE: GPUs (Graphics Processing Units) are specialized processors. They were originally designed for graphics and gaming, but now they are critical for AI/ML because of their parallel processing capabilities. Everyone’s talking about them and demanding more of them!

The H800 is a deliberately limited version of the H100, NVIDIA's flagship high-performance AI GPU. The U.S. government restricts the export of the H100 to China, so Chinese companies cannot directly purchase unrestricted H100 GPUs. In response, NVIDIA created the H800, a compliant alternative that meets the export regulations.

I’ve seen Alexandr Wang (billionaire and Scale AI CEO) claiming that DeepSeek has about 50,000 NVIDIA H100s that they can't talk about because of the US export controls that are in place. I haven’t seen proof though.

DeepSeek claims to have 10,000 older A100 GPUs acquired before export controls.

There have been cases of NVIDIA AI processors being smuggled from Singapore to China, so I wouldn’t be surprised if DeepSeek unofficially got access to the better-performing GPUs.

E) Other companies are copying DeepSeek

This one’s true, even though I don’t like the negative connotation of “copying” in this context.

DeepSeek’s reasoning process can be directly observed through its API. The model shows its Chain of Thought (CoT) process in real time. So even if we might not see all the details behind it, we know a big part, compared for example to OpenAI models.

So boom, developers can access both DeepSeek’s reasoning content and final answers through the API. It makes total sense to study it. Hugging Face is attempting to recreate the training data and scripts for DeepSeek-R1 (the reasoning model built on top of V3) that DeepSeek hasn't disclosed.
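Here is a rough sketch of what "reasoning content plus final answer" looks like from a developer's side. It assumes the OpenAI-style chat format, the `deepseek-reasoner` model name, and a `reasoning_content` field on the returned message, which is how I understand DeepSeek's API docs; check the official documentation before relying on any of these names. The sample response is hand-written for illustration, not real model output, and no network call is made.

```python
# Assumed endpoint for DeepSeek's OpenAI-style chat API (verify in the docs).
API_URL = "https://api.deepseek.com/chat/completions"


def build_request(question: str) -> dict:
    """Build the JSON body for a chat request to the reasoning model."""
    return {
        "model": "deepseek-reasoner",  # assumed model name
        "messages": [{"role": "user", "content": question}],
    }


def split_reasoning(response: dict) -> tuple[str, str]:
    """Return (chain of thought, final answer) from a chat response."""
    message = response["choices"][0]["message"]
    # 'reasoning_content' is the assumed field holding the chain of thought.
    return message.get("reasoning_content", ""), message["content"]


# Hand-written example of the assumed response shape (NOT real output).
sample_response = {
    "choices": [
        {
            "message": {
                "role": "assistant",
                "reasoning_content": "9.11 has 0.11 after the point, 9.8 has 0.80...",
                "content": "9.8 is larger than 9.11.",
            }
        }
    ]
}

request_body = build_request("Which is larger, 9.11 or 9.8?")
reasoning, answer = split_reasoning(sample_response)

print("Request model:", request_body["model"])
print("Reasoning:", reasoning)
print("Answer:", answer)
```

In a real client you would POST `request_body` to `API_URL` with your API key and pass the parsed JSON to `split_reasoning`.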

I have mentioned that DeepSeek is open-source, which means the code and weights are freely available. What remains private are the training data, its preparation methods, and the technical details of the whole setup.

F) DeepSeek invented a new method of training LLMs.

Yes, their approach is innovative, but they didn't invent the underlying techniques.

We could simplify a lot and say that DeepSeek is so great because it managed to optimize the hardware and let the model basically teach itself.

The Mixture of Experts (MoE) architecture that DeepSeek uses was already known. It divides complex tasks among specialized sub-networks. So instead of running a 671B parameter model at full capacity, the MoE architecture activates only around 5-6% of the parameters for each token.
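To make the "only some parameters are activated" idea concrete, here is a toy sketch of generic top-k expert routing. It is not DeepSeek's actual router; the sizes (8 experts, 2 active, 4-dimensional vectors) and the random weights are made up for illustration.

```python
import math
import random

random.seed(0)

NUM_EXPERTS = 8   # toy value; real models use far more experts
TOP_K = 2         # experts activated per token (toy value)
DIM = 4           # size of a token vector (toy value)


def random_matrix(rows: int, cols: int) -> list:
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix: list, vector: list) -> list:
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(scores: list) -> list:
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


router = random_matrix(NUM_EXPERTS, DIM)                          # scores each expert for a token
experts = [random_matrix(DIM, DIM) for _ in range(NUM_EXPERTS)]   # one tiny network per expert


def moe_layer(token: list):
    """Send the token to its TOP_K best-scoring experts and mix their outputs."""
    scores = matvec(router, token)
    chosen = sorted(range(NUM_EXPERTS), key=lambda i: scores[i], reverse=True)[:TOP_K]
    gates = softmax([scores[i] for i in chosen])
    output = [0.0] * DIM
    for gate, idx in zip(gates, chosen):
        expert_out = matvec(experts[idx], token)   # only chosen experts do any work
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen


token = [0.5, -1.0, 0.25, 2.0]
output, chosen = moe_layer(token)
print(f"Experts used: {chosen} ({TOP_K} of {NUM_EXPERTS})")
print(f"Share of expert parameters touched: {TOP_K / NUM_EXPERTS:.0%}")
```

In this toy setup a quarter of the expert weights are used per token. As far as I know, DeepSeek reports about 37B of the 671B parameters being active per token, which is where the 5-6% figure comes from.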

Similarly, FP8 was already known. However, I read that DeepSeek was the first to successfully use it for training at an extremely large scale.

NOTE: FP8 (8-bit floating point) is a numerical precision format that makes AI model training more efficient.
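One simple way to see why fewer bits help is to count the memory needed just to hold the weights. This is a rough sketch, not a description of DeepSeek's setup: as I understand it their training mixes precisions, and real training also needs memory for gradients, optimizer state, and activations, none of which are counted here.

```python
# Memory needed just to store the weights at different precisions.
NUM_PARAMS = 671e9  # parameter count of DeepSeek-V3 quoted in this post

BYTES_PER_VALUE = {
    "FP32": 4,  # 32-bit floating point
    "BF16": 2,  # 16-bit floating point
    "FP8": 1,   # 8-bit floating point
}


def weight_memory_gb(num_params: float, bytes_per_value: int) -> float:
    """Gigabytes needed to store num_params values of the given size."""
    return num_params * bytes_per_value / 1e9


for name, size in BYTES_PER_VALUE.items():
    print(f"{name}: {weight_memory_gb(NUM_PARAMS, size):,.0f} GB")
```

Halving the bits halves the bytes that have to be stored and moved between GPUs, which is a big part of why lower precision makes training cheaper.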

G) DeepSeek’s low cost will decrease the ROI of the US $500 billion AI investment

I didn’t even think of this, but someone apparently claimed that DeepSeek's efficiency improvements would reduce the Return on Investment of $500 billion invested by the US in infrastructure for AI.

This claim is wrong because: a) If DeepSeek's methods are more efficient, ALL datacenters benefit b) More efficiency = more AI output per GPU = higher ROI, not lower.

The Jevons paradox says that if AI becomes more efficient to use (that is, a single application needs fewer resources), we will end up consuming more of it. The cost of using the resource drops, so demand increases, and total resource consumption rises.

Unlike physical goods, there's no natural ceiling to intelligence demand.
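The Jevons argument can be sketched with a simple constant-elasticity demand curve. The prices and elasticity values below are made up for illustration. The paradox only kicks in when demand is elastic enough (elasticity above 1), and assuming that AI demand behaves this way is exactly the bet the argument makes.

```python
def demand(price: float, elasticity: float, scale: float = 1000.0) -> float:
    """Constant-elasticity demand: quantity = scale * price ** (-elasticity)."""
    return scale * price ** (-elasticity)


def total_spend(price: float, elasticity: float) -> float:
    """Total money spent on the resource at a given unit price."""
    return price * demand(price, elasticity)


OLD_PRICE = 1.00   # hypothetical cost per unit of AI output
NEW_PRICE = 0.10   # the same unit after a 10x efficiency gain

for elasticity in (0.5, 1.5):
    before = total_spend(OLD_PRICE, elasticity)
    after = total_spend(NEW_PRICE, elasticity)
    trend = "rises" if after > before else "falls"
    print(f"elasticity {elasticity}: total spend {before:.0f} -> {after:.0f} ({trend})")
```

With inelastic demand (0.5) a 10x price drop shrinks total spend, but with elastic demand (1.5) total spend grows about threefold even though each unit is ten times cheaper.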

H) China is stealing your data if you are using DeepSeek

First of all, what does it mean to “use DeepSeek”? There are several options:

The official DeepSeek app: Similar to how you use OpenAI’s models in the ChatGPT app, you can use DeepSeek LLMs in the DeepSeek app. Using the app may allow DeepSeek's creators to store user data.

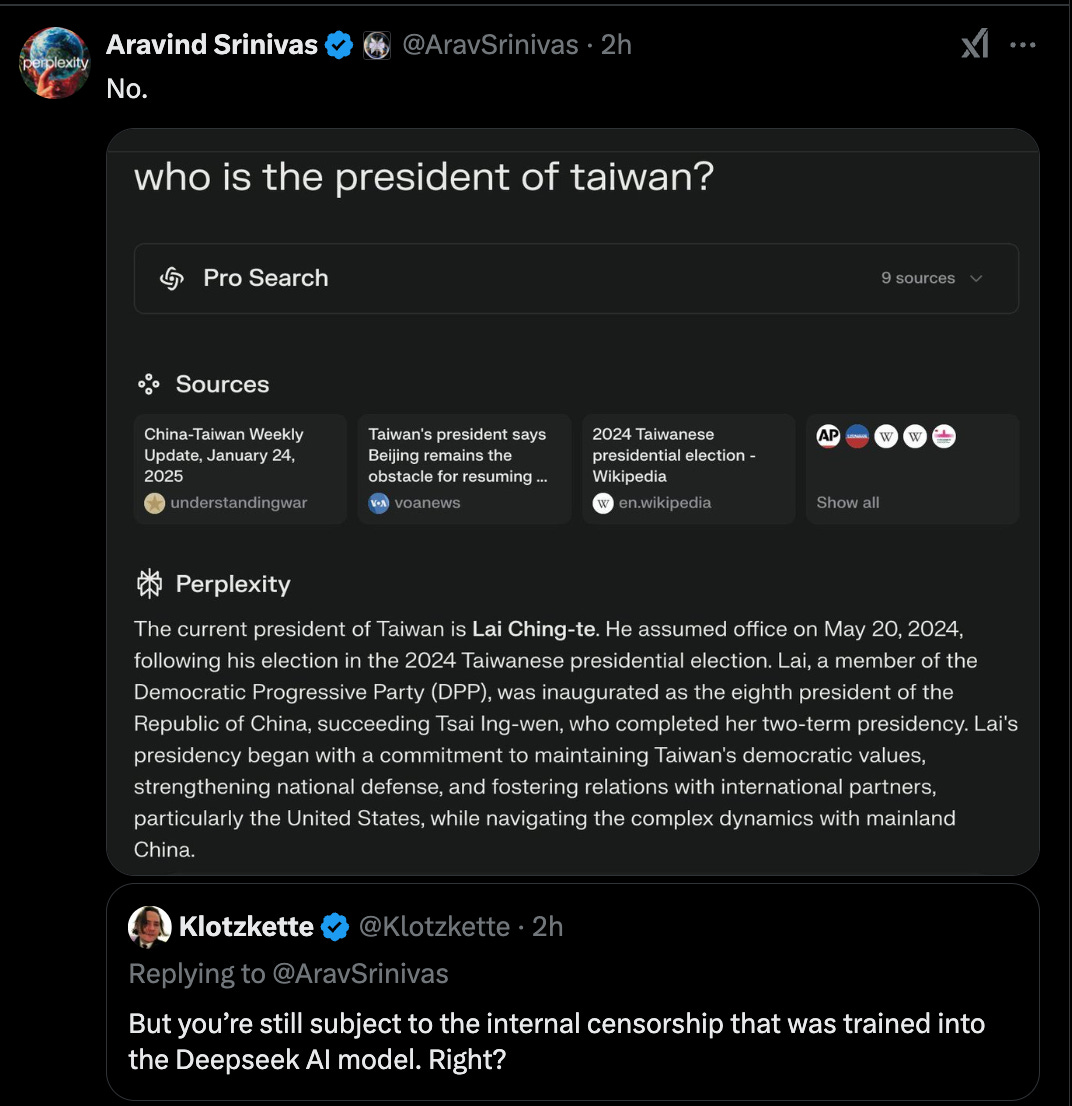

Other apps: You can also use DeepSeek in other apps, e.g., Perplexity. Those apps are not related to China; they just integrated the model into their own product.

Using DeepSeek as a developer: DeepSeek has open-sourced its model weights, so developers can take these weights, run them on their own servers (in their own country), and build whatever they want on top, whether that's a coding assistant, a writing tool, or something totally new. They can add capabilities like web search, code execution, and much more.

NOTE: An LLM is a collection of matrices filled with floating-point numbers called weights. These weights are the result of training on vaaaast amounts of text data. When you input text, it gets converted into number vectors, which then travel through these matrices to generate output text. Self-hosting an LLM means downloading the model weights, setting up your own servers, and running inference software. Once you have that, all computation happens on your servers.
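The "text becomes vectors, vectors travel through matrices" idea from the note can be shown with a toy model. Everything here is made up for illustration: a four-word vocabulary and two tiny hand-written weight matrices stand in for the billions of trained weights in a real LLM.

```python
VOCAB = ["hello", "world", "deep", "seek"]   # toy vocabulary

# "Weights": in a real model these come from training; here they are invented.
EMBEDDINGS = [        # one input vector per vocabulary word
    [1.0, 0.0],       # hello
    [0.0, 1.0],       # world
    [1.0, 1.0],       # deep
    [-1.0, 0.5],      # seek
]
OUTPUT_WEIGHTS = [    # one row per vocabulary word, used to score it as the next word
    [0.0, 0.1],       # hello
    [1.0, -0.8],      # world
    [0.2, 0.3],       # deep
    [0.5, 1.0],       # seek
]


def next_word(word: str) -> str:
    """Convert a word to a vector, multiply by the weights, pick the best score."""
    vector = EMBEDDINGS[VOCAB.index(word)]
    scores = [sum(w * x for w, x in zip(row, vector)) for row in OUTPUT_WEIGHTS]
    return VOCAB[scores.index(max(scores))]


print("hello ->", next_word("hello"))
print("deep ->", next_word("deep"))
```

With these weights the toy model continues "hello" with "world" and "deep" with "seek". Nothing in the computation leaves the machine it runs on, which is the whole point of self-hosting.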

So while most people use DeepSeek through the DeepSeek mobile app (which really does send your chats to their servers), if you use DeepSeek within a different app, your data stays with that app. Aravind, the CEO of Perplexity, posted several great tweets on this concern, so I really recommend checking them out.

What do you think about DeepSeek? Have you tried it?

Let me know in the comments.

P. S. I also recommend checking out the DeepSeek papers:

This is my favourite line: "I am not an expert to say how much should GPUs cost". The art of thinking is knowing what you know and what you don't know, unfortunately a rare talent.